Geocode Addresses with PostGIS and Tiger/LINE

My previous post, Setup Geocoder with PostGIS and Tiger/LINE, prepared a PostGIS geocoder with TIGER/Line data for Colorado. This post uses that setup to bulk geocode addresses from OpenStreetMap buildings to try to determine the accuracy of the geometry data derived from the input addresses.

Quality Expectations

Let's get this out of the way: No geocoding process is going to be perfectly accurate.

There are a variety of contributing factors to the data quality. The geocoder data source is pretty decent, coming from the U.S. Census Bureau's TIGER/Line data set. The vintage is 2022, and this is as recent as I can load today using this data source. We should understand that this will not contain any new development or changes from the past year (or so).

The OpenStreetMap data is also a source of error. It is certain that there are typos, outdated addresses, and other inaccuracies in the OpenStreetMap data. Some degree of that has to be expected with nearly any data source. The region of OpenStreetMap used will also be an influence. Some regions just don't have many boots-on-the-ground editing and validating OpenStreetMap data.

Setup Geocoder with PostGIS and Tiger/LINE

Geocoding addresses is the process of taking a street address and converting it to its location on a map. This post shows how to create a PostGIS geocoder using the U.S. Census Bureau's TIGER/Line data set. This is part one of a series of posts exploring geocoding addresses. The next post illustrates how to geocode in bulk with a focus on evaluating the accuracy of the resulting geometry data.

Before diving in, let's look at an example of geocoding.

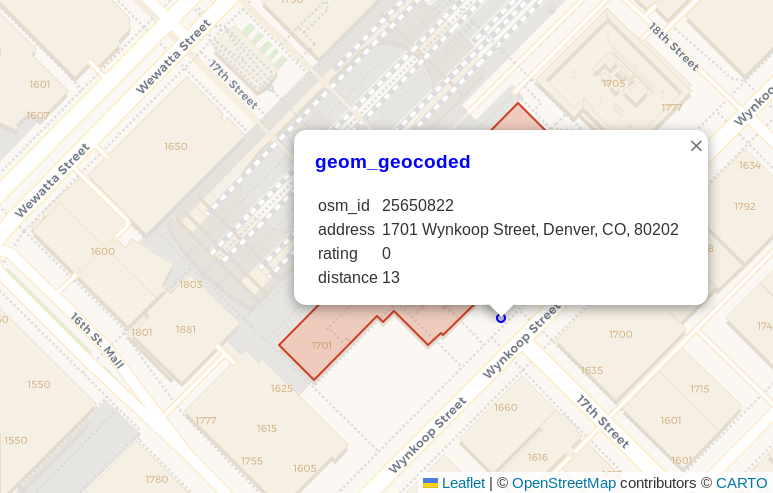

The address for Union Station (see on OpenStreetMap) is 1701 Wynkoop Street, Denver, CO, 80202. This address was the input to geocode. The blue point shown in the following screenshot is the resulting point from the PostGIS geocode() function. The pop-up dialog shows the address, a rating of 0, and the calculated distance away from the OpenStreetMap polygon representing that address (13 meters), shown in red under the pop-up dialog.
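As a rough sketch of what that call can look like, the query below geocodes the Union Station address and returns the rating, the normalized address, and the resulting point. It assumes the postgis_tiger_geocoder extension is installed with Colorado TIGER/Line data loaded, and that the tiger schema is on the search_path. The column aliases are only illustrative.

```sql
-- Geocode a single address and return only the best match (max_results = 1).
-- A lower rating means a closer match; 0 is the best possible rating.
SELECT g.rating,
       pprint_addy(g.addy) AS normalized_address,
       ST_AsText(g.geomout) AS geocoded_point
  FROM geocode('1701 Wynkoop Street, Denver, CO 80202', 1) AS g;
```

The geocoded_point column is the point that would be compared against the OpenStreetMap polygon to calculate the distance.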

PostgreSQL 16 improves infinity: PgSQLPhriday #012

This month's #pgsqlphriday challenge is the 12th PgSQL Phriday, marking the end of the first year of the event! Before getting into this month's topic I want to give a shout out to Ryan Booz for starting #pgsqlphriday. More importantly though, a huge thank you to the hosts and contributors from the past year! I looked forward to seeing the topic each month followed by waiting to see who all would contribute and how they would approach the topic.

Check out pgsqlphriday.com for the full list of topics, including recaps from each topic to link to contributing posts. This month is the 7th topic I've been able to contribute to the event. I even had the honor of hosting #005 with the topic Is your data relational? I'm really looking forward to another year ahead!

Now returning to your regularly scheduled PgSQL Phriday content.

This month, Ryan Booz chose the topic: What Excites You About PostgreSQL 16? With the release of Postgres 16 expected in the near(ish) future, it's starting to get real. It won't be long until casual users are upgrading their Postgres instances. To decide what to write about I headed to the Postgres 16 release notes to scan through the documents. Through all of the items, I picked this item attributed to Vik Fearing.

- Accept the spelling "+infinity" in datetime input

The rest of this post looks at what this means, and why I think this matters.
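A minimal sketch of the change, using timestamptz as one example of a datetime type. The column aliases are illustrative, and the behavior noted for older versions is my expectation from the release note rather than captured output.

```sql
-- These spellings are accepted by earlier versions as well as Postgres 16.
SELECT 'infinity'::timestamptz AS positive_infinity,
       '-infinity'::timestamptz AS negative_infinity;

-- The explicit plus sign is the spelling added in Postgres 16.
-- On earlier versions this cast is expected to raise an input syntax error.
SELECT '+infinity'::timestamptz AS explicit_positive_infinity;
```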

Load the Right Amount of OpenStreetMap Data

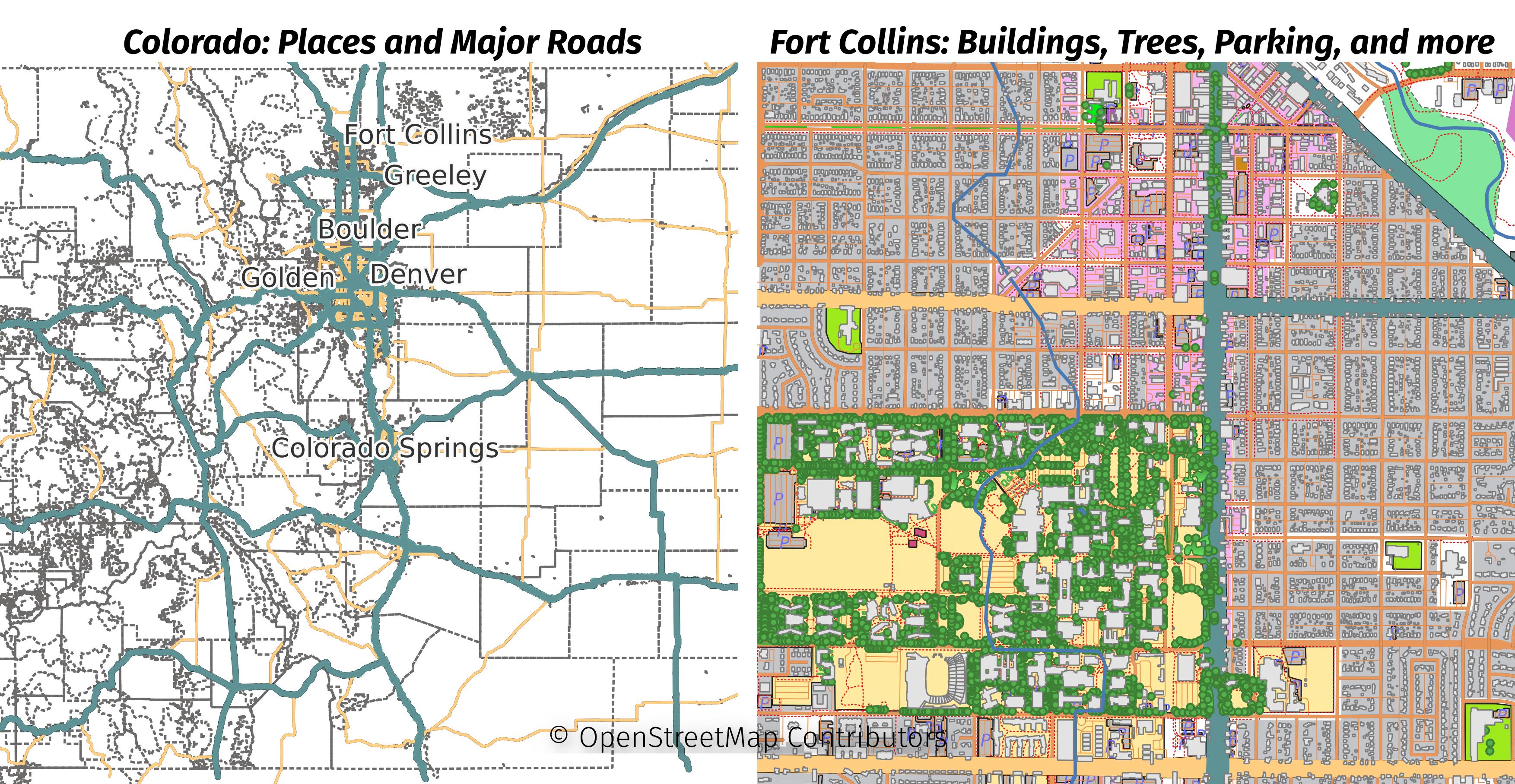

Populating a PostGIS database with OpenStreetMap data is a favorite way to start a new geospatial project. Loading a region of OpenStreetMap data gives you data ranging from roads, buildings, water features, amenities, and so much more! The breadth and bulk of data is great, but it can turn into a hindrance, especially for projects focused on smaller regions. This post explores how to use PgOSM Flex with custom layersets, multiple schemas, and osmium. The goal is to load limited data for a larger region, while loading detailed data for a smaller, target region.

The larger region for this post will be the Colorado extract from Geofabrik. The smaller region will be the Fort Collins area, extracted from the Colorado file. The following image shows the data loaded in this post with two maps side-by-side. The minimal data loaded for all of Colorado is shown on the left and the full details of Fort Collins are on the right.

Getting started with MobilityDB

MobilityDB is an exciting project I have been watching for a while. The project's README explains that MobilityDB "adds support for temporal and spatio-temporal objects." The spatio-temporal part translates as PostGIS plus time, which is very interesting stuff to me. I had briefly experimented with the project in its early days and found a lot of potential. MobilityDB 1.0 was released in April 2022; at the time of writing, the 1.1.0 alpha release is available.

This post explains how I got started with MobilityDB using PostGIS and pgRouting. I'm using OpenStreetMap roads data with pgRouting to generate trajectories. If you have gpx traces or other time-aware PostGIS data handy, those could be used in place of the routes I create with pgRouting.

Install MobilityDB

When I started working on this post a few weeks ago I had an unexpectedly difficult time trying to get MobilityDB working. I was trying to install from the production branch with Postgres 15 and Ubuntu 22.04 and ran into a series of errors. It turned out the fixes to allow MobilityDB to work with these latest versions had been in the develop branch for more than a year. After realizing what the problem was I asked a question and got an answer. The fixes are now in the master branch tagged as 1.1.0 alpha. Thank you to everyone involved with making that happen!

To install MobilityDB I'm following the instructions to install from source. These steps involve git clone then using cmake, make, and sudo make install. Esteban Zimanyi explained they are working on getting packaging worked out for providing deb and yum installers. It looks like work is progressing on those!

Update Configuration

After installing the extension, postgresql.conf needs to be updated to include PostGIS in shared_preload_libraries and to increase max_locks_per_transaction to double the default value.

shared_preload_libraries = 'postgis-3'

max_locks_per_transaction = 128
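
The same settings can also be applied from a SQL session instead of editing postgresql.conf by hand. The following is a minimal sketch of that alternative, not the approach described above. It assumes a superuser connection, and both settings only take effect after Postgres is restarted, so the statements are not meant to be run in one pass. ALTER SYSTEM replaces any existing shared_preload_libraries value, so include any other libraries already listed there.

```sql
-- Alternative to editing postgresql.conf: values are written to postgresql.auto.conf.
ALTER SYSTEM SET shared_preload_libraries = 'postgis-3';
ALTER SYSTEM SET max_locks_per_transaction = 128;

-- After restarting Postgres, confirm the settings are active.
SHOW shared_preload_libraries;
SHOW max_locks_per_transaction;

-- Create the extension; CASCADE also creates PostGIS if it is missing.
CREATE EXTENSION IF NOT EXISTS mobilitydb CASCADE;
```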