GIS - It's All About the Data

When you’re working with GIS, it’s all about the data. Once you have spatial data to work with, you need to clean and manage it before you can use it effectively, and that has been my biggest challenge. This post shares some of my experiences with a couple of systems for storing and managing spatial data.

If you are using PostGIS, see my PostGIS: Tame your spatial data posts. Part 1 shows how to improve performance with large polygons; Part 2 shows how to reduce many small objects.

The Beginning

My first experiences with GIS data came about four years ago. I had just started at Front Range Community College, and among my research and database roles I was responsible for using ArcGIS Desktop to create maps and do other spatial analysis work. I was excited to have the industry-standard mapping software at my disposal, and my first few experiences were great. I jumped in and worked through the ArcGIS training book we had available. The examples, like those in most training, were contrived and simplified, so I whizzed through them, going from cover to cover in mere days.

Frustration Replaced Infatuation

Once the initial learning phase was behind me, I tried to apply my newfound knowledge. I booted up ArcGIS (which is slow to start), grabbed some internal data, and off I went, trying to accomplish some real work instead of completing tutorials. At this point, my love affair was already wearing off. While I still felt like I was generating some cool output, the going was slow and the output wasn’t as good as I had imagined. It only took a couple of weeks for this feeling to really sink in.

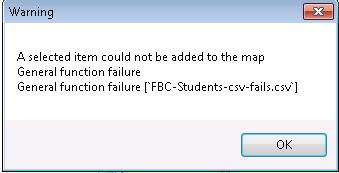

I started blaming the software for all of my woes. To be fair to myself, it’s hard to not blame the software when it gives error messages like this:

That error message can be replicated by trying to import a true CSV file. You know, the one that’s separated with the comma (,) character implied by the “C” in CSV? It’s a pretty standard format, but ArcGIS Desktop apparently can’t handle it. What ArcGIS wants is a tab-delimited file… why not just tell me that?! It would have saved me hours of troubleshooting, because I couldn’t figure out what “general function failure” was supposed to mean.
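For what it’s worth, converting a comma-separated file to a tab-delimited one takes only a few lines of Python’s standard `csv` module. This is a minimal sketch: the function name `csv_to_tsv` and the sample data are made up for illustration, and whether the converted file imports cleanly into a given ArcGIS version is an assumption I have not verified.

```python
import csv
import io


def csv_to_tsv(csv_text: str) -> str:
    """Convert comma-separated text to tab-separated text.

    Quoted fields that contain commas are parsed as a single field.
    """
    reader = csv.reader(io.StringIO(csv_text, newline=""))
    out = io.StringIO()
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    for row in reader:
        writer.writerow(row)
    return out.getvalue()


# Hypothetical sample: note the comma inside the quoted address field.
sample = 'name,address\n"Smith, Jane","123 Main St, Denver"\n'
print(csv_to_tsv(sample))
```

Using the `csv` module rather than a plain string replace matters here, because a naive replace would also split quoted values that legitimately contain commas.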

Further, ArcGIS is SLOOOW. The computer that runs my ArcGIS installation has 4 cores and 8 GB of RAM. Still, a tool from the Toolbox, such as Geocode, typically takes at least 10 seconds to open. It’s just a prompt box, so why does it take SO LONG to load on modern hardware?

Expanding my Learning

A few months ago I started investigating other solutions for creating maps. It started when I stumbled upon Zain Memon’s fantastic presentation about making maps in Python which led me to more and more videos... my GIS infatuation had returned! I quickly started exploring software options for GIS-related work (including ArcGIS) and wrote this post that was followed by another fun project.

Then it hit me...

GIS Data Is Data!

As a data professional, I’m not sure why it took me so long to realize this… but GIS data is still just data! Maybe it’s the way ArcGIS obscures the raw data from the user, or maybe that’s just me. I know you can open the attribute table, but the volume of data typically renders that functionality rather useless. The attribute table doesn’t let you run a SELECT TOP 10 style query to just see what the data looks like, and advanced querying is painful or impossible. Adding calculated columns is awkward at best, and any tool that you need to run from the toolbox is a crap-shoot of trying to get it to run without a cryptic error. Finally, navigating the bloated UI is downright annoying. As I’ve said before:
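To show what I mean by a quick peek at the data: in PostgreSQL the equivalent of `SELECT TOP 10` is a `LIMIT` clause. The sketch below uses Python’s built-in `sqlite3` module purely as a stand-in so it runs without a database server; the `roads` table, its columns, and the `preview` helper are hypothetical.

```python
import sqlite3

# In-memory database standing in for a real spatial database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE roads (id INTEGER PRIMARY KEY, name TEXT, highway TEXT)"
)
conn.executemany(
    "INSERT INTO roads (name, highway) VALUES (?, ?)",
    [(f"Road {i}", "residential") for i in range(1000)],
)


def preview(conn, limit=10):
    """Return the first few rows so you can see what the data looks like."""
    return conn.execute(
        "SELECT id, name, highway FROM roads ORDER BY id LIMIT ?",
        (limit,),
    ).fetchall()


for row in preview(conn):
    print(row)
```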

By navigating through the 11 tabs (11?!) you can eventually style each layer as you choose.

Spatial Data: Welcome Home!

Now meet PostgreSQL paired with PostGIS. With the proper tools, spatial data isn’t so difficult to manage. I can query it, update it, or even delete it directly using a friendly, well-documented language. I’ll admit I am biased towards relational databases, but spatial data is perfect for them, especially when the relational database has the absolute best spatial data support via PostGIS!

Want to find spatial data that overlaps? Maybe you want to find something within a distance of somewhere else. Or you could want to generate a buffer around a large number of features. All of that is a breeze using the spatial queries.
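Roughly, those three questions map onto the PostGIS functions `ST_Intersects`, `ST_DWithin`, and `ST_Buffer`. The sketch below keeps the SQL in Python strings; the table and column names (`parcels`, `zones`, `places`, `geom`) are hypothetical, and the `run_query` helper assumes a psycopg2-style connection, which is my assumption rather than anything this post depends on.

```python
# Hypothetical tables: parcels(id, geom), zones(id, geom), places(id, name, geom).

# Which parcels overlap which zones?
OVERLAPS_SQL = """
SELECT p.id AS parcel_id, z.id AS zone_id
FROM parcels AS p
JOIN zones AS z ON ST_Intersects(p.geom, z.geom);
"""

# Which places lie within a distance of a named place?
# For geometry columns the distance is in the units of the data's SRID.
WITHIN_DISTANCE_SQL = """
SELECT a.name
FROM places AS a
JOIN places AS b ON ST_DWithin(a.geom, b.geom, %(distance)s)
WHERE b.name = %(origin)s
  AND a.id <> b.id;
"""

# Generate a buffer around every feature in a table.
BUFFER_SQL = """
SELECT id, ST_Buffer(geom, %(distance)s) AS geom
FROM places;
"""


def run_query(conn, sql, params=None):
    """Run a query on a psycopg2-style connection and return all rows."""
    with conn.cursor() as cur:
        cur.execute(sql, params)
        return cur.fetchall()
```

With a real connection, a call might look like `run_query(conn, WITHIN_DISTANCE_SQL, {"distance": 500, "origin": "Main Campus"})`, where the values are placeholders.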

Data can then be optimized using GIST indexes to help make these queries lightning fast. It’s simple and quick to query for a subset of data based on an attribute or spatial relationship, and to use that subset to create a new data source. But why would you want to do that?
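Creating a GIST index on a geometry column is a single statement. Here is a minimal sketch that builds that statement; the helper name `gist_index_sql`, the index naming pattern, and the default column name `geom` are my own illustrative choices.

```python
def gist_index_sql(table: str, column: str = "geom") -> str:
    """Build a CREATE INDEX statement for a GIST index on a geometry column."""
    # Only accept simple identifiers, since they are placed directly in the SQL.
    for identifier in (table, column):
        if not identifier.isidentifier():
            raise ValueError(f"unexpected identifier: {identifier!r}")
    index_name = f"{table}_{column}_gist"
    return f"CREATE INDEX {index_name} ON {table} USING GIST ({column});"


print(gist_index_sql("roads"))
# CREATE INDEX roads_geom_gist ON roads USING GIST (geom);
```

After building an index on a large table, running `ANALYZE` on that table helps the query planner make use of it.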

Reduce Your Data

GIS data gets big – the OpenStreetMap project has proved that with their full download weighing in at 40 GB when compressed! It’s common sense that the more data you’re working with, the slower things will go. Taking those two tidbits into account, it’s safe to say that querying a small amount of spatial data will be quicker than querying a large amount of spatial data.

Have large polygon data? My post PostGIS: Tame your spatial data Part 1 can help!

Here’s a practical example. When I’m working with maps, I always want to be able to zoom out to the state level (such as Colorado) and I almost always want to display the Interstates and major highways for reference points. Since I’m already using OSM roads data to display the fine details I want to use the same basic dataset for that high-level view too. The problem is, for Colorado alone that’s almost 400,000 rows of roads data. That translates into the software working really hard to find and format the small amount of desired data.

My solution was to write a query in pgAdmin 4 that creates a brand-new table with exactly (and only!) what that layer needs. I filtered down to just the roads I wanted and merged the tiny segments of road together using ST_Union, all in one quick query. The resulting table contains 239 rows, and any query against it is lightning fast. Managing your data to return the smallest dataset possible is one of the best things you can do to increase GIS performance.
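A query along these lines might look like the sketch below. It is not my exact query: it assumes the default osm2pgsql schema (a `planet_osm_line` table with `highway`, `ref`, and `way` columns), the new table name `major_roads` is made up, and the highway types and grouping you choose will change how many rows you end up with. The `create_major_roads` helper assumes a psycopg2-style connection.

```python
# Assumes the default osm2pgsql schema; adjust names to match your import.
MAJOR_ROADS_SQL = """
CREATE TABLE major_roads AS
SELECT ref,
       highway,
       ST_Union(way) AS way  -- merge the many small segments per road
FROM planet_osm_line
WHERE highway IN ('motorway', 'trunk')
GROUP BY ref, highway;
"""


def create_major_roads(conn):
    """Create the reduced table on a psycopg2-style connection."""
    with conn.cursor() as cur:
        cur.execute(MAJOR_ROADS_SQL)
    conn.commit()
```

Grouping by `ref` and `highway` is what collapses hundreds of thousands of short segments into one row per road; a GIST index on the new `way` column is a sensible next step.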

Read about pgAdmin 4's fantastic new feature: geometry viewer!

When I attempted the same basic operation in ArcGIS there were two negatives I encountered. First was speed, second was quality. What PostgreSQL accomplished in a few seconds, ArcGIS took a few minutes. That wasn’t a deal breaker, but the output data quality was. For some reason the ArcGIS operation (I can’t remember exactly what it’s called right now) left some funny gaps in the roads when rendered. I suspect it has to do with the precision of the merge operation along with data quality issues, but PostGIS handled it just fine. Since PostGIS did it better and quicker, I just decided to give up getting the same results from ArcGIS.

PostGIS vs. GeoDatabase

In terms of performance related to spatial data storage, management and retrieval I believe that PostGIS is far superior to the GeoDatabase format. The speed of loading, retrieving and manipulating data is exactly what you would expect from PostgreSQL. The speed of the same tasks using ArcCatalog into GeoDatabase format is nothing to write home about.

Being a SQL nerd, I am right at home managing data using a small amount of code. I find it much easier than using the ArcGIS Toolbox, which is slow and provides terrible user feedback when something goes wrong. (See the screenshot above.)

Getting started with PostGIS does have a much steeper learning curve than getting started with ArcGIS and the GeoDatabase format. If you’re tech-shy, PostGIS may not be for you. For me, that wasn’t an obstacle, and I believe it was worth every headache throughout the learning curve. There is no perfect solution, and each has pros and cons.

Hopefully this post helped identify what I found to be good and bad to help you decide what’s best for you!