Testing PostgreSQL Unlogged Tables for Performance

PostgreSQL has a feature called UNLOGGED tables.

I became intrigued by UNLOGGED tables after reading

this post.

According to the docs:

"Data written to unlogged tables is not written to the write-ahead log (see Chapter 29), which makes them considerably faster than ordinary tables. However, they are not crash-safe: an unlogged table is automatically truncated after a crash or unclean shutdown."

It's important to note that unlogged tables shouldn't be used for storing critical data. My use case for them is to quickly load data to staging tables before cleaning and reorganizing. I end up importing large amounts of data on a regular basis, so this is of interest to me. I decided to test regular tables vs unlogged tables by importing 6 months of NOAA's hourly weather data. You can read about my previous tests with this data here.

Hardware and Setup

As I typically do, I'm using a VPS from Digital Ocean's $5/mo offering. It provides a single core, 512 MB RAM, and to make room for the file sizes I'm working with I'm taking advantage of Digital Ocean's newest feature: block storage. I'm deploying PostgreSQL 9.3 in Docker.

Preparing the Data Set

The first step is to download a few files from

NOAA's site.

I'm pulling the first 6 months of data for 2016. Each .zip file is roughly 80M, unzipped over 700MB.

mkdir ~/noaa && cd ~/noaa

wget http://www.ncdc.noaa.gov/orders/qclcd/QCLCD201606.zip

wget http://www.ncdc.noaa.gov/orders/qclcd/QCLCD201605.zip

wget http://www.ncdc.noaa.gov/orders/qclcd/QCLCD201604.zip

wget http://www.ncdc.noaa.gov/orders/qclcd/QCLCD201603.zip

wget http://www.ncdc.noaa.gov/orders/qclcd/QCLCD201602.zip

wget http://www.ncdc.noaa.gov/orders/qclcd/QCLCD201601.zip

Extract all of the zip files in the ~/noaa/ directory.

unzip \*.zip

I want to create a single file so I can just run a single copy statement. I start by pulling the header row from one file, then adding each files data in.

# Create the header for the file...

head -n 1 201601hourly.txt > hourly_2016_01_06.txt

# Skip the header line of each file

tail -n +2 201601hourly.txt >> hourly_2016_01_06.txt

tail -n +2 201602hourly.txt >> hourly_2016_01_06.txt

tail -n +2 201603hourly.txt >> hourly_2016_01_06.txt

tail -n +2 201604hourly.txt >> hourly_2016_01_06.txt

tail -n +2 201605hourly.txt >> hourly_2016_01_06.txt

tail -n +2 201606hourly.txt >> hourly_2016_01_06.txt

This leaves me with 3.2 GB of data in the hourly_2016_01_06.txt file. This will be what I use

to test importing data into PostgreSQL, both with and without the UNLOGGED option.

First Test Run

I created the PostgreSQL instance usage a custom PostgreSQL Docker image and expose a port so

I can explore the data using PgAdminIII on my local computer. By using --rm, Docker will cleanup

(aka DELETE!)

the data after I'm done with it. The COPY command requires the file is available to the local server,

so I attach the ~/noaa directory on the VPS to the /tmp directory within the Docker container.

Don't use this in production!

You can see the basics of

how I create this image here.

sudo docker run -it --rm -p 7765:5432 -v ~/noaa:/tmp rustprooflabs/postgresql93

Create the database.

psql -U ryanlambert -h localhost -p 7765 -d postgres -c "CREATE DATABASE noaa WITH ENCODING = 'UTF8' TEMPLATE Template0"

Create the table. The code is ugly, but so is the raw data. This is only a staging table so I'm

setting all columns to accept anything that fits within TEXT.

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "CREATE TABLE hourly_noaa

(WBAN text, Date text, Time text, StationType text, SkyCondition text, SkyConditionFlag text, Visibility text, VisibilityFlag text, WeatherType text, WeatherTypeFlag text, DryBulbFarenheit text, DryBulbFarenheitFlag text, DryBulbCelsius text, DryBulbCelsiusFlag text, WetBulbFarenheit text, WetBulbFarenheitFlag text, WetBulbCelsius text, WetBulbCelsiusFlag text, DewPointFarenheit text, DewPointFarenheitFlag text, DewPointCelsius text, DewPointCelsiusFlag text, RelativeHumidity text, RelativeHumidityFlag text, WindSpeed text, WindSpeedFlag text, WindDirection text, WindDirectionFlag text, ValueForWindCharacter text, ValueForWindCharacterFlag text, StationPressure text, StationPressureFlag text, PressureTendency text, PressureTendencyFlag text, PressureChange text, PressureChangeFlag text, SeaLevelPressure text, SeaLevelPressureFlag text, RecordType text, RecordTypeFlag text, HourlyPrecip text, HourlyPrecipFlag text,Altimeter text ,AltimeterFlag text);"

Now to load the big NOAA file.

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "COPY hourly_noaa FROM '/tmp/hourly_2016_01_06.txt' DELIMITER ',' CSV;"

This took just over five minuets (302 seconds) to load just over 26 million rows of data. This worked out to be roughly 86,000 rows per second. Not too bad on a budget server. My previous testing on a local VM and a slow HDD was a mere 46,000 rows per second. While the raw .txt file was a little over 3 GB, the database size has grown to roughly 5 GB.

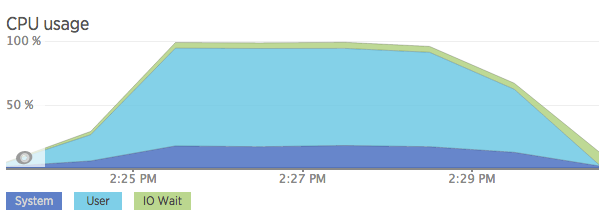

Last, looking at New Relic's CPU chart for the time of execution, you can see that the CPU is a bottleneck in the process. You can also see the IO wait creeping in which I haven't seen on my DO servers until I started using their Block Storage. I think there is some extra latency with the way those drives attach over the network, but haven't done enough testing to confirm.

Test Run - UNLOGGED

I created the database same as above, but the create table command has changed. The only difference is that:

`CREATE TABLE ...`

Becomes:

`CREATE UNLOGGED TABLE ...`

Now to load the data into the new unlogged table.

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "COPY hourly_noaa_unlogged FROM '/tmp/hourly_2016_01_06.txt' DELIMITER ',' CSV;"

Loading to the unlogged table took 3 minutes and 17 seconds (197 seconds), providing a 35% reduction in execution time. We're now up to 132,000 rows/second. Further, the final database size ended up almost 1 GB smaller due to the lack of writing to the WAL. I'm not including the CPU chart for this run because it looked pretty much like the one above, just with a shorter curve. Because the CPU seems to be a definite bottleneck at this point, I want to try again with more cores.

Take 2 with Bigger Hardware

For this set of tests I created a VPS at the $80/month ($0.119/hr) level. This gives me 4 CPUs and 8 GB RAM. I start again with the traditional table, like the first test above, which ran in 3 minutes and 20 seconds. That's almost as fast as the unlogged table on the budget server. I then repeat the test with the unlogged table and was a bit disappointed. It took 2 minutes and 43 seconds to run, 23% faster than the regular table. There's still a benefit with my initial approach, but not as significant as when I was running with less power.

After a bit of poking around I remember that each session in PostgreSQL is single threaded, so to take full advantage of the multiple cores I decide to go back to the individual monthly files and load each month in a separate session. Because I'm already setting up multiple connections, I decide to see how fast I can make it by also setting up file to load into a dedicated table.

# Each command is exectued in a separate tab in terminal...

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "COPY hourly_noaa_unlogged1 FROM '/tmp/201601hourly.txt' DELIMITER ',' CSV;"

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "COPY hourly_noaa_unlogged2 FROM '/tmp/201602hourly.txt' DELIMITER ',' CSV;"

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "COPY hourly_noaa_unlogged3 FROM '/tmp/201603hourly.txt' DELIMITER ',' CSV;"

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "COPY hourly_noaa_unlogged4 FROM '/tmp/201604hourly.txt' DELIMITER ',' CSV;"

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "COPY hourly_noaa_unlogged5 FROM '/tmp/201605hourly.txt' DELIMITER ',' CSV;"

psql -U ryanlambert -h localhost -p 7765 -d noaa -c "COPY hourly_noaa_unlogged6 FROM '/tmp/201606hourly.txt' DELIMITER ',' CSV;"

Running 6 simultaneous sessions ran in 53 seconds, loading roughly 500,000 rows per second! This provided a 74% reduction in execution time compared to the regular table. Obviously this is more work to configure and maintain than running in a single process, but if you have high performance requirements to load lots of data, it may be worth the effort. Just using unlogged tables along can have a noticeable impact on load times to staging, but adding in the benefit of multiple cores can really improve performance.

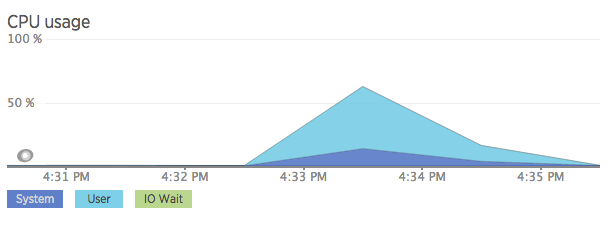

The screen shot below shows that the latest run didn't fully strain the CPU now that I'm using 4 cores instead of one.

Caveat

My PostgreSQL Docker image has been optimized to run on the budget server. I suspect that if I worked to optimize the configuration I could probably squeeze even more performance out of it.

Summary

Unlogged tables can provide a major performance boost if you need to load large amounts of data. On the budget server it reduced execution time by almost 35%, while on the more powerful server and by utilizing multiple cores I was able to reduce load times by almost 75%.

Loading data to a staging table is a perfect example of when to use this feature. What I've shown in this post is just loading to staging, you would still want to work up a production table with proper data types and indexes added.