"Dockerizing" PostgreSQL

Warning: This post is outdated. It is here for reference purposes only.

Early on in 2015 I was working on a couple different side projects that encouraged

me to start using Docker.

The projects were using Flask WSGI applications for the UI with PostgreSQL

as the data storage layer. Docker was added to my work flow to make the deployment

of Python WSGI applications easier and reduce the dependency headache that

virtualenv tries to solve, but doesn't.

Once I started using Docker in that use case I immediately saw potential benefits

in other areas... Specifically in deploying PostgreSQL databases.

This road has been filled with bumps and potholes, but the end result is a well tested, flexible and powerful environment. In this post I will walk through how I have "Dockerized" PostgreSQL 9.3 and explain some of the pros and cons to doing so.

Docker != Golden Ticket

Before getting to the good stuff, let's make sure we're clear on Docker. Docker is new and many people feel that it isn't ready for production yet. I think this post is one of the better explanations of many of the headaches I have encountered. Docker adds complexity to your overall environment, and is not going to magically solve any problems for you in any way. (Problems can be solved, but not by magic) If you don't already have a solid grasp on systems administration, managing a database server, backups, restores, and the like... this won't remove those headaches or learning curves either.

Now with the disclaimer taken care of... I believe that used properly, Docker eases many pains and can add significant benefit to your development and/or production environments.

Dockerfile

My Dockerfile, shown below, installs everything I need to be installed in my

PostgreSQL instances:

Beyond those componenents, it adds my customized pg_hba.conf and postgresql.conf files for use.

With these configuration files baked into the image it creates

a double-edged sword because it makes updating the configuration files difficult.

On the side of good, it makes me reasonably certain that my defined and tested

configuration in place for every instance.

On the other side, any change to the configuration requires that I rebuild and transfer

the Docker image to where I need it. This always seems cumbersome to me, but lukily I don't

need to reconfigure very often.

FROM ubuntu:14.04

MAINTAINER RustProof Labs <rustprooflabs@gmail.com>

RUN apt-get update \

&& apt-get install -y software-properties-common \

wget \

&& sh -c 'echo "deb http://apt.postgresql.org/pub/repos/apt trusty-pgdg main" >> /etc/apt/sources.list' \

&& wget --quiet -O - http://apt.postgresql.org/pub/repos/apt/ACCC4CF8.asc | sudo apt-key add - \

&& sudo apt-get update \

&& apt-get install -y postgresql-9.3-postgis-2.1 \

postgresql-9.3-postgis-scripts \

postgresql-9.3-pgrouting \

liblwgeom-dev \

&& apt-get clean \

&& apt-get autoclean \

&& apt-get autoremove

ADD pg_hba.conf /etc/postgresql/9.3/main/pg_hba.conf

ADD postgresql.conf /etc/postgresql/9.3/main/postgresql.conf

RUN chown postgres /etc/postgresql/9.3/main/pg_hba.conf && \

chown postgres /etc/postgresql/9.3/main/postgresql.conf

USER postgres

RUN /etc/init.d/postgresql start && \

psql -c "CREATE USER admin_user WITH SUPERUSER PASSWORD 'changemeToSomethingBetter';" && \

psql -c "CREATE DATABASE admin_user OWNER admin_user TEMPLATE template0 ENCODING 'UTF8';"

EXPOSE 5432

VOLUME ["/etc/postgresql", "/var/log/postgresql", "/var/lib/postgresql"]

WORKDIR /var/lib/postgresql

# Set the default command to run when starting the container

CMD ["/usr/lib/postgresql/9.3/bin/postgres", "-D", "/var/lib/postgresql/9.3/main", "-c", "config_file=/etc/postgresql/9.3/main/postgresql.conf"]

pg_hba.conf

The pg_hba.conf file below is very similar to what I use across the board.

Notice that I'm allowing all user names to be used at all addresses. Because

PostgreSQL is running inside Docker, and I want to be able to access the database

from outside that particular container, I must allow all IP addresses to connect.

Access is limited to the service when I bind ports at runtime by only allowing specific

IP addresses to access the exposed port.

Another piece to pay attention to is that I'm allowing only two types of connections:

- Local Socket

- SSL (remote)

This greatly improves security by requiring all remote connections to use SSL. This reduces the chance that a malicious user on the same network could sniff out the connection parameters used to gain access to the server.

# This file has been Heavily Reduced/Modified

###################################

## Use at your own risk! ##

###################################

# TYPE DATABASE USER ADDRESS METHOD

local all postgres peer

local all all md5

hostssl all all all md5

Unfortunately, I have recently found two different products that do not support SSL Required connections with PostgreSQL. You should test any product you're evaluating to ensure it can connect using SSL Required for the sake of your security!

postgresql.conf

The postgresql.conf file below is fairly standard with most of the original

comments removed. I have enabled

pg_stat_statements

to allow me to track query performance and make performance tuning an easier

task. Like in the pg_hba.conf file above, I've set listen_addresses to

allow connections from any IP.

This probably isn't the best way to accomplish this, but it's been my solution.

# This file has been Heavily Reduced/Modified

###################################

## Use at your own risk!

###################################

data_directory = '/var/lib/postgresql/9.3/main'

hba_file = '/etc/postgresql/9.3/main/pg_hba.conf'

ident_file = '/etc/postgresql/9.3/main/pg_ident.conf'

external_pid_file = '/var/run/postgresql/9.3-main.pid'

# Required since all connections are "external" via Docker

listen_addresses = '*'

port = 5432

max_connections = 50

unix_socket_directories = '/var/run/postgresql'

bonjour = off

ssl = true

ssl_cert_file = '/etc/ssl/certs/ssl-cert-snakeoil.pem'

ssl_key_file = '/etc/ssl/private/ssl-cert-snakeoil.key'

password_encryption = on

shared_buffers = 128MB

temp_buffers = 8MB

log_line_prefix = '%t [%p-%l] %q%u@%d '

log_timezone = 'UTC'

stats_temp_directory = '/var/run/postgresql/9.3-main.pg_stat_tmp'

datestyle = 'iso, mdy'

timezone = 'UTC'

lc_messages = 'C'

lc_monetary = 'C'

lc_numeric = 'C'

lc_time = 'C'

default_text_search_config = 'pg_catalog.english'

shared_preload_libraries = 'pg_stat_statements'

track_activity_query_size = 2048

pg_stat_statements.track = all

Build PostgreSQL 9.3 Image

With the Dockerfile and configuration files in place it is time to build the Docker image. The command below assumes you are already in the working directory containing the Dockerfile and config files above.

$ sudo docker build --no-cache -t rustprooflabs/postgresql93 .

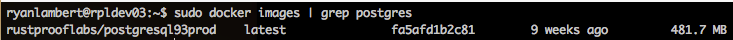

As you can see in the screen shot below this generates a 481 MB image.

I use the --no-cache switch because I ran into issues like what's described in

this post.

While this increases build times, it prevents me from scratching my head trying to

figure out why the changes I clearly made didn't make it to the final image.

Moving Docker Images around

I have a dedicated server that is responsible for building Docker images, so

after the images are built they have to go somewhere else so I can use them on

other machines.

I don't really use the Docker Registry yet,

because I don't want my production images available publicly, and I can't justify

the cost of a private hosted registry. Instead, I pass my images

around in gzipped tarballs by taking advantage of the docker save

and docker load commands.

Save Docker Image

I use these commands to save and prepare the image for transfer.

$ sudo docker save rustprooflabs/postgresql93 > postgresql93.tar

$ gzip postgresql93.tar

Use docker save

to save a current image as a .tar file.

I choose to gzip the file to save on bandwidth;

the originally saved .tar file was 473 MB while the .gz version weighed in

at 162 MB. It's worth trimming 66% of the file size.

Percent differences are commonly miscalculated, so for reference:

(current-prior) /prior=% diff

Our example: (162 MB - 473 MB) / 473 MB = -0.658 = -66%

Transfer Image

I typically use a simple scp command to move files around.

$ scp postgresql93.tar.gz user@server:~/

Load Docker Image

After the image has been transferred to the target machine, decompress

the file and load the image into Docker using

docker load.

$ gunzip postgresql93.tar.gz

$ sudo docker load < postgresql93.tar

Running the PostgreSQL Container

It's finally time to test the container and run PostgreSQL. Notice that on the host side

I'm only allowing localhost to connect to port 5567, which maps to the standard PostgreSQL

port 5432. If you need to allow connections to be made from any IP,

simply remove 127.0.0.1:.

sudo docker run -d --name=DEMO_postgres93 -p 127.0.0.1:5567:5432 rustprooflabs/postgresql93

Another option, to run in interactive mode for development or testing purposes:

sudo docker run -it -p 5567:5432 rustprooflabs/postgresql93

One headache with Docker is garbage collection, but in development

environments where the data does not need to persist when the

container is stopped feel free to add --rm to automatically remove

the container when it's stopped.

Warning: Using

--rmwill cause data loss if you haven't taken other steps to persist it. Use at your own risk.

Change Superuser Password

Now that we have a shiny new PostgreSQL server running in Docker, the first thing to do

should be to change the superuser's password. Notice how I'm using psql on the host

machine to connect to PostgreSQL inside Docker. Just think of it as an external server!

psql -U admin_user -h localhost -p 5567 -c "ALTER ROLE admin_user WITH PASSWORD 'NewPasswordHere';"

Run Commands

Now I'll use psql to create a simple table and insert some data.

psql -U admin_user -h localhost -p 5567 -d admin_user -c "CREATE TABLE test (id INT);"

psql -U admin_user -h localhost -p 5567 -d admin_user -c "INSERT INTO test VALUES (1), (2), (3);"

Database Backups and Restore

I use the same backup and restore methods I have used

for PostgreSQL for some time now. To take a backup with pg_dump:

pg_dump -U admin_user -h localhost -p 5567 -d admin_user -f db_backup.sql

Restore a backup using psql:

psql -U admin_user -h localhost -p 5567 -d admin_user < db_backup.sql

An untested backup is not a backup.

How I use PostgreSQL: Before and After

Before Dockerizing PostgreSQL, I had an instance of PostgreSQL installed on each server I needed it on. Production servers were configured one way, development servers typically used default configurations because it was quick and easy. Each instance had one or more database in it, depending on that server's role. This worked well for a while but I had two major headaches.

- Installing PostgreSQL 9.3 + PostGIS 2.1 on Debian

- Database Independence

Installing Everything

Long ago, I chose Debian 7 as my OS of choice for production. It's stable, secure, runs well, and met my needs. The downside is now I'm using more "cutting-edge" software, like PostGIS, and installing Postgis 2.1 along with PostgreSQL 9.3 became a headache. An easy solution was to install them using Ubuntu 14.04 which makes more recent packages easier to install by default.

By using Docker, I can take advantage of Ubuntu's more recent software packages while still using Debian as the base OS for production servers. Now the base OS isn't as important. I can even deploy a new PostgreSQL instance on Windows machine as long as Docker was available!

Database Independence

This headache really came about when I started working with spatial data. Spatial data is big and only gets bigger. As I started working more with PostGIS my database backups, scheduled daily, started getting bigger and bigger. And bigger. Most of the size was in a single PostGIS enabled database that does not have daily updates. My other, more actively updated databases have backups that are measured in MB, but the full backups grew quickly into double-digit GB. By putting each database in it's own instance, it allows me to more easily schedule custom backup schedules per database easily.

Another side effect was that the original disk I set the server up with quickly filled up. The temporary solution was to create a new tablespace but that created its own issues and made the database more server dependent. By placing databases to separate containers, it's much easier to move a single large database to a new server or disk with more room, while leaving the other smaller databases unaffected.

Summary

By Dockerizing my PostgreSQL needs, I have opened new doors in the realm of development and testing. I used to worry about having to redeploy my test database instances because I didn't want to go through the initial setup. Now, whenever I think, "Ooh, I should try..." I just spool up a new container and experiment away. Here's my list of Pros and Cons in no specific order.

Pros

- I am now very practiced at backups and restores

- Easier to test backups - Spool up new container and load

- Non-Standard and changing port numbers -- Benefits security

- Each DB is isolated from other DBs

- Easy to spool up development/test databases

- Much easier to run different versions of PostgreSQL

Cons

- Added complexity

- Takes extra work to get COPY command (and others that require local file system access) to function properly.

- Possible performance hit?

- Potential disk usage issues - Orphan Docker Images and/or Containers

My overall loads might be less demanding than many enterprise-level RDMSs, I believe my expectations are higher. And Docker has delivered.